Iteration and Simulation

We’ve noted that functions are helpful when you repeat code more than twice; we’ve also noted that a lot of statistical methods involve doing the same thing a large number of times. Putting those together motivates a careful approach to iteratation; conducting simulations is a very relevant example.

This is the second module in the Iteration topic; the relevant slack channel is here.

Example

I’ll write code for today’s content in a new R Markdown document called iteration_and_simulation.Rmd in the example_iteration directory / repo. The code chunk below loads some of the usual packages.

library(tidyverse)

library(forcats)

library(stringr)

theme_set(theme_bw())

theme_update(legend.position = "bottom")

set.seed(1)for loops

For this example, I’m going to start with the pretty simple data frame defined below.

df = data_frame(

a = rnorm(20),

b = rnorm(20),

c = rnorm(20),

d = rnorm(20)

)I’d like to apply my simple z_score function from writing functions to each column of this dataframe. For completeness, that function is below.

z_score = function(x) {

z = mean(x) / sd(x)

z

}We can apply the z_score function to each column of df using the lines below. (Throughout this content, I’ll take advantage of the fact that data frames are a kind of list – keeping this in mind when you’re iterating is really useful).

z_score(df[[1]])

## [1] 0.208621

z_score(df[[2]])

## [1] -0.007426873

z_score(df[[3]])

## [1] 0.1714126

z_score(df[[4]])

## [1] 0.09686769But now we’ve broken our “don’t repeat code more than twice” rule! Specifically, we’ve applied the same function / operation to the elements of a list sequentially. This is exactly the kind of code repetition for loops address

Below, I define an output list with the same number of entries as my target dataframe; a sequence to iterate over; and a for loop body that applies the z_score function for each sequence element and saves the result.

output = vector("list", length = 4)

for (i in 1:4) {

output[[i]] = z_score(df[[i]])

}This is already much cleaner than using four almost-identical lines of code, and will make life easier the larger our sequence gets.

In this example, I bypassed a common first step in writing loops because I already had the function I wanted to repeat. Frequently, however, I’ll start with repeated code segements, then abstract the underlying process into a function, and then wrap things up in a for loop.

map

A criticism of for loops is that there’s a lot of overhead – you have to define your output vector / list, there’s the for loop bookkeeping to do, etc – that distracts from the purpose of the code. In this case, we want to apply z_score to each column of df, but we have to scan inside the for loop to figure that out.

The map functions in purrr try to make the purpose of your code clear. Compare the loop above to the line below.

output = map(df, z_score)The first argument to map is the vector /list (/ data frame) we want to iterate over, and the second argument is the function we want to apply to each element. The line above will produce the same output as the previous loop, but is clearer and easier to understand (once you’re used to map …).

This code (using map) is why we pointed out in writing functions that functions can be passed as arguments to other functions. The second argument in map(df, z_score) is a function we just wrote. To see how powerful this can be, suppose we wanted to apply a different function, say median, to each column of df. The chunk below includes both the loop and the map approach.

output = vector("list", length = 4)

for (i in 1:4) {

output[[i]] = median(df[[i]])

}

output = map(df, median)Again, both options produce the same output, but the map places the focus squarely on the function you want to apply by removing much of the bookkeeping.

map variants

There are some useful variants to the basic map function if you know what kind of output you’re going to produce. Below we use map_dbl because z_score outputs a single numeric value each time; the result is a vector instead of a list.

output = map_dbl(df, z_score)If we tried to use map_int or map_lgl, we’d get an error because the output of z_score isn’t a integer or a logical. This is a good way to help catch mistakes when they arise.

Let’s look at a case where the output isn’t a scalar. Our modified z_score function, which returns a data frame with the mean, standard deviation, and Z score is below.

z_score = function(x) {

if (!is.numeric(x)) {

stop("argument x should be numeric")

} else if (length(x) == 1) {

stop("z-scores cannot be computed for length 1 vectors")

}

mean_x = mean(x)

sd_x = sd(x)

z = mean_x / sd_x

data_frame(

mean = mean_x,

sd = sd_x,

z = z

)

}We can apply this version z_score to df using map; doing so will produce a list of data frames.

output = map(df, z_score)Since we know z_score produces a data frame, though, we can use the output-specific map_df; this will produce a single data frame.

output = map_df(df, z_score)The map_df variant is helpful when your map statement is part of a longer chain of piped commands.

Revisiting past examples

Scraping Amazon

In reading data from the web, we wrote code that allowed us to scrape information in Amazon reviews; in writing functions we wrapped that code into a function called read_page_reviews which, for a given url, produces a data frame containing review titles, star ratings, and text.

library(rvest)

library(stringr)

read_page_reviews <- function(url) {

h = read_html(url)

title = h %>%

html_nodes("#cm_cr-review_list .review-title") %>%

html_text()

stars = h %>%

html_nodes("#cm_cr-review_list .review-rating") %>%

html_text() %>%

str_extract("\\d") %>%

as.numeric()

text = h %>%

html_nodes(".review-data:nth-child(4)") %>%

html_text()

data_frame(title, stars, text)

}Now let’s use this function to read many pages of reviews. First I’ll define a vector of URLs to act as an input, and then I’ll iterate over that vector using both a for loop and a map_df statement.

url_base = "https://www.amazon.com/product-reviews/B00005JNBQ/ref=cm_cr_arp_d_viewopt_rvwer?ie=UTF8&reviewerType=avp_only_reviews&sortBy=recent&pageNumber="

urls = str_c(url_base, 1:10)

output = vector("list", 10)

for (i in 1:10) {

output[[i]] = read_page_reviews(urls[[1]])

}

dynamite_reviews = bind_rows(output)

dynamite_reviews = map_df(urls, read_page_reviews)As with previous examples, using a for loop is pretty okay but the map_df call is clearer.

LoTR and map2

Importing the LoTR words data is a bit trickier. In writing functions we produced a version of the function below, which reads a sub-table of the Excel file containing the data, cleans the result, and returns a tidy data frame.

lotr_load_and_tidy = function(path, range, movie_name) {

df = readxl::read_excel(path, range = range) %>%

janitor::clean_names() %>%

gather(key = sex, value = words, female:male) %>%

mutate(race = tolower(race),

movie = movie_name)

df

}In this case we can’t use map directly, because there are two arguments we need to iterate over – range and movie_name. To see this more clearly, we’ve written the complete import process using a for loop.

cell_ranges = list("B3:D6", "F3:H6", "J3:L6")

movie_names = list("Fellowship", "Two Towers", "Return")

output = vector("list", 3)

for (i in 1:3) {

output[[i]] = lotr_load_and_tidy(path = "./data/LotR_Words.xlsx",

range = cell_ranges[[i]],

movie_name = movie_names[[i]])

}

lotr_tidy = bind_rows(output)The map2 variant is handy when you have two input lists to iterate over. The implementation is similar to that for map: you supply the input lists and the function name, and map2 uses elements of the input lists as arguments to the function.

lotr_tidy = map2_df(.x = cell_ranges, .y = movie_names,

~lotr_load_and_tidy(path = "./data/LotR_Words.xlsx",

range = .x,

movie_name = .y)) That code makes sure all the details of argument mappings are clear, but shorter versions are possible.

lotr_tidy = map2_df(cell_ranges, movie_names,

lotr_load_and_tidy,

path = "./data/LotR_Words.xlsx") We can compare this to code we wrote in writing functions, when we had given the path argument a default value.

bind_rows(

lotr_load_and_tidy(cell_range = "B3:D6", movie_name = "Fellowship"),

lotr_load_and_tidy(cell_range = "F3:H6", movie_name = "Two Towers"),

lotr_load_and_tidy(cell_range = "J3:L6", movie_name = "Return")

)In this case it’s not obvious which coding approach is better – using map2_df or three function calls inside bind_rows – and that happens a lot. No matter what, though, you should be able to write code either way so you have a choice instead of being constrained by your skillset!

Simulation: SLR for one \(n\)

Last class we wrote a short function to simulate data from a simple linear regression, to fit the regression model, and to return estimates of regression coefficients. Specifically, we generate data from \[ y_i = \beta_0 + \beta_1 x_i + \epsilon_i \] for subjects \(1 \leq i \leq n\) with \(\epsilon_i \sim N[0,1]\) and return estimates \(\hat{\beta}_0, \hat{\beta}_1\). That function is below.

sim_regression = function(n, beta0, beta1) {

sim_data = tibble(

x = rnorm(n, mean = 1, sd = 1),

y = beta0 + beta1 * x + rnorm(n, 0, 1)

)

ls_fit = lm(y ~ x, data = sim_data)

tibble(

n = n,

beta0_hat = coef(ls_fit)[1],

beta1_hat = coef(ls_fit)[2]

)

}Important statistical properties of estimates \(\hat{\beta}_0, \hat{\beta}_1\) are established under the conceptual framework of repeated sampling. If you could draw from a population over and over, your estimates will have a known mean and variance: \[ \hat{\beta}_0 \sim \left[\beta_0, \sigma^2 \left(\frac{1}{n} + \frac{\bar{x}}{\sum (x_i - \bar{x})^2}\right) \right] \mbox{ and } \hat{\beta}_1 \sim \left[\beta_1,\frac{\sigma^2}{\sum (x_i - \bar{x})^2} \right] \] (Because our simulation design generates errors from a Normal distribution we also know that the estimates follow a Normal distribution, although that’s not guaranteed by least squares estimation.)

In the real world, drawing samples is time consuming and costly, so “repeated sampling” remains conceptual. On a computer, though, drawing samples is pretty easy. That makes simulation an appealing way to examine the statistical properties of your estimators.

Let’s run sim_regression() 100 times to see the effect of randomness in \(\epsilon\) on estimates \(\hat{\beta}_0, \hat{\beta}_1\).

output = vector("list", 100)

for (i in 1:100) {

output[[i]] = sim_regression(30, 2, 3)

}

sim_results = bind_rows(output)Taking a look at the for loop we used to create these results, you might notice that the sequence is used to keep track of the output but doesn’t affect the computation performed. In cases like these, the purrr::rerun function is very handy.

sim_results = rerun(100, sim_regression(30, 2, 3)) %>%

bind_rows()Structurally, rerun is a lot like map – the first argument defines the amount of iteration and the second argument is the function to use in each iteration step. As with map, we’ve replaced a for loop with a segment of code that makes our purpose much more transparent but both approaches give the same results.

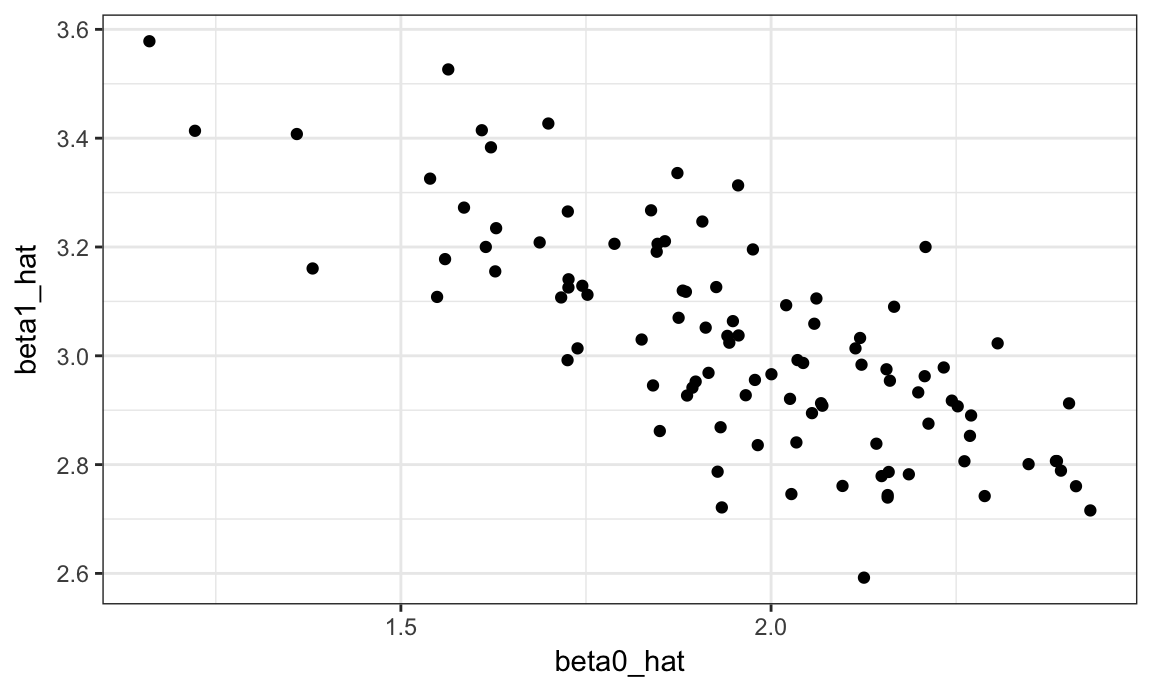

Let’s make some quick plots and compute some summaries for our simulation results.

sim_results %>%

ggplot(aes(x = beta0_hat, y = beta1_hat)) +

geom_point()

sim_results %>%

gather(key = parameter, value = estimate, beta0_hat:beta1_hat) %>%

group_by(parameter) %>%

summarize(emp_mean = mean(estimate),

emp_var = var(estimate)) %>%

knitr::kable(digits = 3)| parameter | emp_mean | emp_var |

|---|---|---|

| beta0_hat | 1.955 | 0.069 |

| beta1_hat | 3.026 | 0.040 |

This is great! We’ve seen how our estimates are distributed under our simulation scenario, and can compare empirical results to theoretical ones. In this way, we can build intution for fundamental statistical procedures under repeated sampling in a way that’s not possible with single data sets.

Simulation: SLR for several \(n\)s

Sample size makes a huge difference on the variance of estimates in SLR (and pretty much every statistical method). Let’s try to clarify that effect through simulating at a few sample sizes.

I’ll start this process with a for loop around the code I established above using rerun (I could start from scratch by nesting one for loop in another for loop, but let’s not).

n_samp = list(30, 60, 120, 240)

output = vector("list", length = 4)

for (i in 1:4) {

output[[i]] = rerun(100, sim_regression(n_samp[[i]], 2, 3)) %>%

bind_rows

}After this loop, output is a list of 4 data frames; each data frame contains the results of 100 simulations at different sample sizes.

Before we spend time looking at the results of the simulation, let’s recast this using map. I want to use a single function in my call to map_df, so I’m going to write a wrapper for the call to rerun that allows me to change the parameters of the simulation (i.e. the argument sim_regression) and the number of simulation replicates (i.e. the first argument to rerun). Once I have this, I’ll call map_df to perform the complete simulation.

simulate_n = function(n_rep, n_samp, beta0, beta1) {

rerun(n_rep, sim_regression(n_samp, beta0, beta1)) %>%

bind_rows()

}

sim_results =

map_df(n_samp, simulate_n,

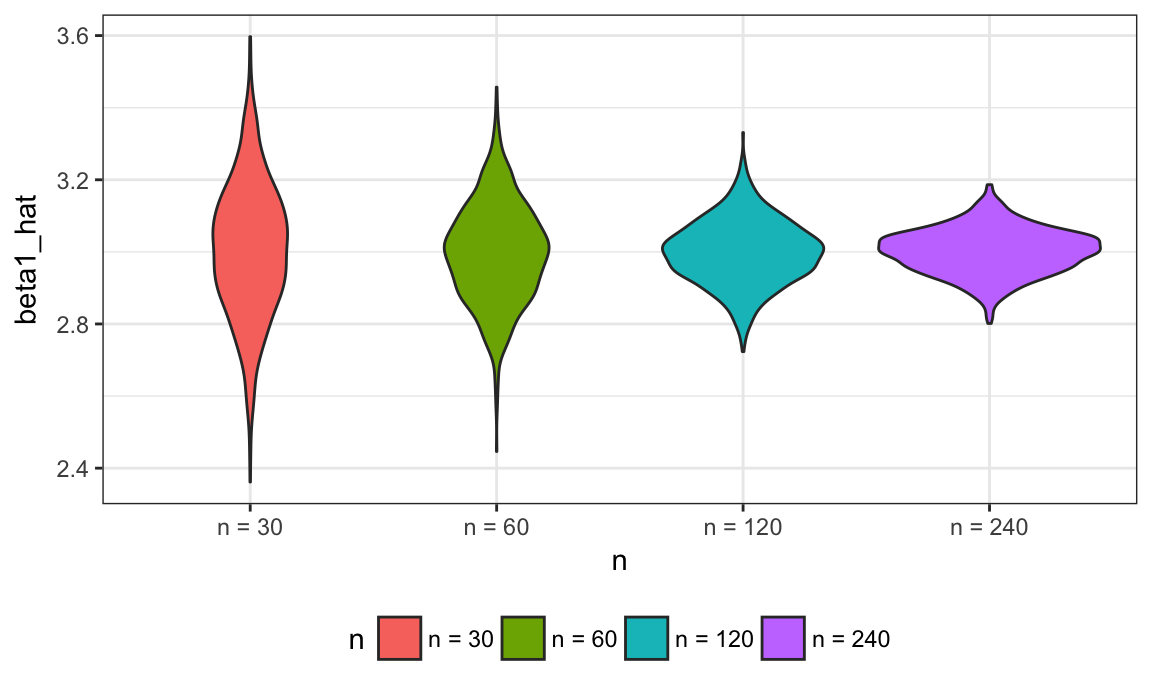

n_rep = 1000, beta0 = 2, beta1 = 3) Let’s take a look at what we’ve accomplished in our simulations! First I’ll take a look at the distribution of slope estimates across sample sizes.

relabel_function = function(n) { str_c("n = ", n) }

sim_results %>%

mutate(n = as.factor(n),

n = fct_relabel(n, relabel_function)) %>%

ggplot(aes(x = n, y = beta1_hat, fill = n)) +

geom_violin()

These estimates are centered around the truth (3) for each sample size, and the width of the distribution shrinks as sample size grows.

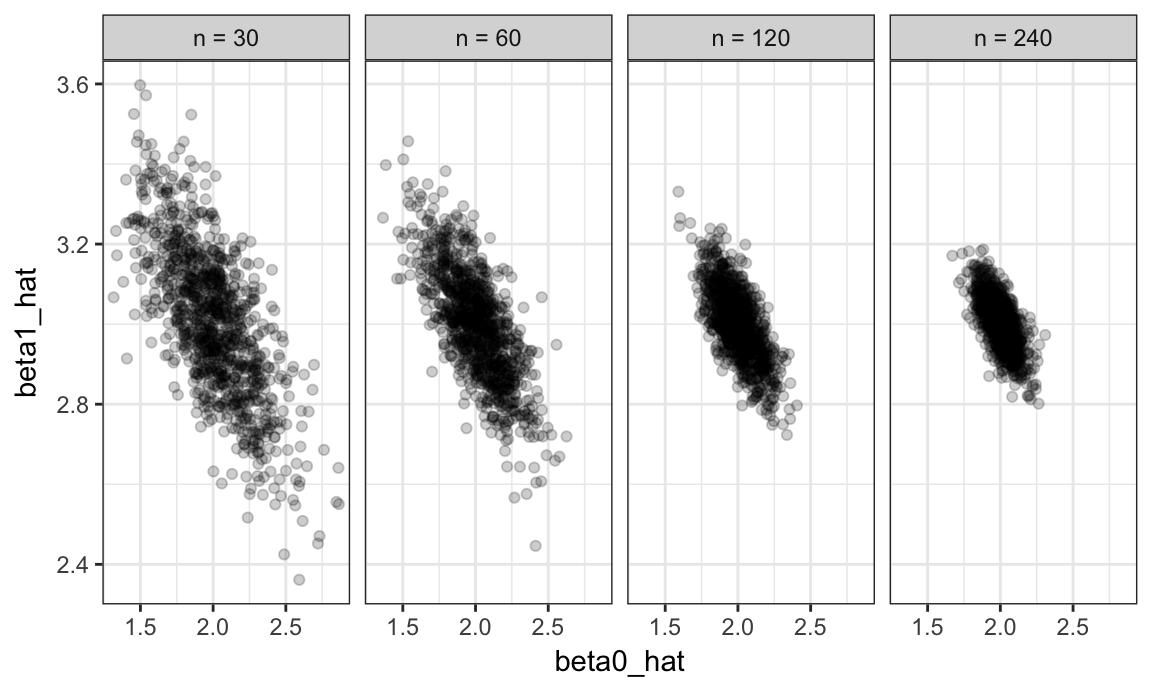

Next, I’ll look at the bivariate distribution of intercept and slope estimates across sample sizes.

sim_results %>%

mutate(n = as.factor(n),

n = fct_relabel(n, relabel_function)) %>%

ggplot(aes(x = beta0_hat, y = beta1_hat)) +

geom_point(alpha = .2) +

facet_grid(~n)

The variability in the slope estimates was shown in the violin plot, but now we have a sense for the bivariate distribution of intercepts and slopes. Estimates of the intercept and slope are correlated with each other; this is expected from theoretical results describing the joint distribution of estimated regression coefficients.

Lastly I’ll look at the empirical mean and variance of these estimates.

sim_results %>%

gather(key = parameter, value = estimate, beta0_hat:beta1_hat) %>%

group_by(parameter, n) %>%

summarize(emp_mean = mean(estimate),

emp_var = var(estimate)) %>%

knitr::kable(digits = 3)| parameter | n | emp_mean | emp_var |

|---|---|---|---|

| beta0_hat | 30 | 2.000 | 0.065 |

| beta0_hat | 60 | 2.007 | 0.038 |

| beta0_hat | 120 | 2.003 | 0.016 |

| beta0_hat | 240 | 1.999 | 0.008 |

| beta1_hat | 30 | 3.006 | 0.034 |

| beta1_hat | 60 | 2.997 | 0.019 |

| beta1_hat | 120 | 2.999 | 0.008 |

| beta1_hat | 240 | 3.002 | 0.004 |

These values are consistent with the formulas presented above. This kind of check is a useful way to support derivations (although they don’t serve as a formal proof in any way).

Other materials

Iteration and simulation can be tricky – the readings below will help as you work through this!

- The chapter on iteration in R for Data Science has a useful treatment of iteration using loops and

map - Jenny Bryan’s

purrrtutorial has a lot of useful information and examples - R Programming for Data Science has information on loops and loop functions; given Roger Peng’s tendency towards base R he focuses on

lapplyand others instead ofmap - This question and response on stack overflow explains why one might prefer

maptolapply

One of the best ways to learn about writing functions is to see what other people have done and try to figure out how it works. We’ve seen a couple of examples in other contexts that might be worth revising

- This app for understanding power uses

mapto generate power curves at various sample sizes - The R file used to create the

starwarsdataset (in thetidyverse) using the Star Wars API processes list output (from the API) using severalmapvariants

The code that I produced working examples in lecture is here.